Responsible innovation: The next wave of design thinking

Building your moral imagination to create a more ethical future

Every profession experiences waves of evolution: moments in time when processes emerge from changing socio-economic, technological, or environmental contexts. Within Design, we’ve ebbed and flowed between waterfall, Agile, iterative, and inclusive design thinking, among others.

As the leader of the Ethics & Society organization at Microsoft, I believe that responsible innovation is the next critical wave of design thinking. Traditional design schools don’t teach ethics, and people often don’t consider ethics a design problem. Today’s technologies are exciting and present tremendous opportunities to augment human abilities across a range of scenarios. At the same time, technologies without appropriate grounding in human-centered thinking, or appropriate mitigations and controls, present considerable challenges. These technologies have the potential to injure people, undermine our democracies, and even erode human rights, and they’re growing in complexity, power, and ubiquity.

Design is a profession known for prioritizing human needs, so design thinking is vital for building an ethical future. Expanding human-centered design to emphasize trust and responsibility is equally vital. As stewards of technology, we realize that today’s decisions may have irreversible and destructive impacts. This creates an urgent need to minimize disasters, conserve resources, and encourage responsible technological innovations.

The stakes are high, so we shouldn’t relegate design thinking solely to those with “design” in their title. As a noun, design is a profession, but as a verb, it’s the act of considered construction. Within Ethics & Society, we employ user researchers, designers, engineers, psychologists, ethicists, and more — all of whom design. We think of ethics as innovation material, and this diversity is critical to help our product teams innovate. Working with engineering teams, we also create holistic tools, frameworks, and processes to use in product development.

Learning to exercise your moral imagination to consider the socio-technical implications of what you create is a bedrock process of responsible innovation. Like any muscle, a moral imagination takes time to build. To help facilitate this, we’re launching a Responsible Innovation Practices Toolkit, a set of practices based on our learnings from developing AI technologies within Microsoft. The toolkit launches today with the Judgment Call card game, Harms Modeling, and Community Jury, and we’ll be adding practices regularly.

We’ll be the first to admit that we don’t have all the answers. Consider this toolkit a humble approach to sharing some of our learnings that might help all of us design technologies with more intention.

Judgment Call: A game to cultivate empathy

Avoiding conflict is a natural impulse for many, yet responsible innovation often rests on our ability to productively facilitate challenging conversations.

Because games can help create safe spaces, we designed Judgment Call, an award-winning game and team-based activity that puts Microsoft AI principles of fairness, privacy and security, reliability and safety, transparency, inclusion, and accountability into action.

Judgment Call cultivates stakeholder empathy through role-playing and imagining scenarios. In the game, participants write product reviews from the perspective of a particular stakeholder, describing what impact and harms the technology could produce from that person’s point of view. Beyond creating empathy, Judgment Call gives technology creators a vocabulary and interactive tool to lead ethics discussions during product development.

This game is not intended to replace performing robust user research, but it can help tech builders learn to exercise their moral imagination.

Harms Modeling: An exercise to imagine real-world repercussions

Most product creators are keenly aware of technology’s ability to harm and earnestly aim to do the opposite. Still, technologies meant to increase public safety are often in tension with privacy, and mechanisms for democratizing information can also be used to manipulate and spread false information.

To identify and mitigate harmful effects, we need to learn how to look around corners and articulate what could go wrong. It’s easy to operate under a shared understanding, only to later learn that “harm” meant something very different to you than it did to your coworkers. Or that your entire team wasn’t representative enough to fully imagine harmful consequences.

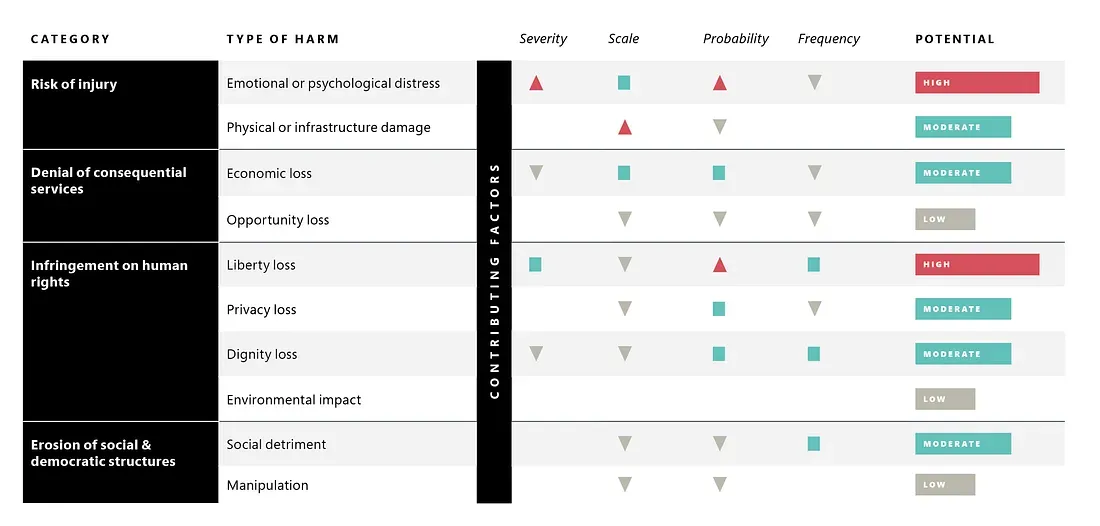

Because we can’t protect against something that we can’t clearly describe, a few years ago the team began exploring how we might create a shared taxonomy of harms to facilitate the important conversation of mitigations and controls. It’s more of an art than a science, and it takes practice. The Harms Modeling exercise guides you in envisioning harms (such as psychological distress, opportunity loss, or environmental impact) that could stem from your technology along vectors such as severity, impact, frequency, and likelihood. It’s akin to threat modeling in security, which uses landscape assessments to understand the trust boundaries and surface areas of attack.

Threat modeling is now a mature, standard component of software development, and we believe that harms modeling is its ethical equivalent, ultimately leading to responsible innovation.
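For teams that want to capture the output of a Harms Modeling session in a structured form, here is a minimal sketch of a harms register. It is an illustration only, not part of the toolkit: the `Harm` class, the 1-to-3 rating scale, the `rank_for_discussion` helper, and the example entries are all hypothetical. The only things taken from the exercise are the harm categories and the four vectors (severity, impact, frequency, likelihood).

```python
from dataclasses import dataclass

# Hypothetical 1-3 rating scale; the exercise names the vectors but
# this sketch assumes the scale purely for illustration.
LOW, MEDIUM, HIGH = 1, 2, 3


@dataclass(frozen=True)
class Harm:
    """One envisioned harm, rated along the four vectors."""
    category: str      # e.g. "psychological distress"
    description: str   # what could go wrong, in plain language
    severity: int
    impact: int
    frequency: int
    likelihood: int

    def ratings(self):
        return (self.severity, self.impact, self.frequency, self.likelihood)


def rank_for_discussion(harms):
    """Order harms so the highest-rated ones are discussed first.

    Assumed heuristic: sort by the single highest rating, then by the
    total across all four vectors. A team may prefer another ordering.
    """
    return sorted(
        harms,
        key=lambda h: (max(h.ratings()), sum(h.ratings())),
        reverse=True,
    )


# Made-up entries a team might record during a session.
harms = [
    Harm("environmental impact", "Model training increases energy use",
         severity=LOW, impact=MEDIUM, frequency=LOW, likelihood=MEDIUM),
    Harm("psychological distress", "Feature surfaces upsetting content",
         severity=MEDIUM, impact=MEDIUM, frequency=HIGH, likelihood=MEDIUM),
    Harm("opportunity loss", "Automated screening filters out applicants",
         severity=HIGH, impact=HIGH, frequency=MEDIUM, likelihood=MEDIUM),
]

ranked = rank_for_discussion(harms)
for harm in ranked:
    print(harm.category, harm.ratings())
```

The ranking is only a conversation starter. The point of writing harms down this way is that everyone on the team is rating the same clearly described harm, which is what makes a discussion of mitigations and controls possible.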

Community Jury: Respectful representation

However regularly we may exercise our moral imagination, it’s still our imagination, and therefore influenced by our lived experiences. To ensure diverse representation in our design processes by those impacted by our technology, our Responsible Innovation Toolkit includes Community Jury.

Adapted from the citizen jury technique, Community Jury facilitates discussion and co-creation between product makers and the people a technology will directly or indirectly impact. Unlike a focus group or market research, Community Jury allows the impacted stakeholders to hear directly from subject matter experts on the product team, and product decision-makers can learn directly about a community’s values, concerns, or ideas around specific issues or problems.

The jurors chosen should represent the diversity of the community the technology will serve, and juror selection should consider factors like age, gender identity, privacy index, introversion, race, ethnicity, and speech, vision, or mobility challenges. On the product side, engineers, designers, and project managers should participate, as well as other relevant subject matter experts who can answer in-depth questions. A neutral moderator facilitates brainstorming and deliberation, and we recommend sessions last at least 1.5 hours and up to 3 hours. This gives jurors time to digest new or complex information and co-create solutions addressing their concerns with the product team.

Beyond an ethical imperative to give voice and agency to people whose lives a technology could potentially disrupt, it’s just good product design. Nobody knows the needs, constraints, risks, or considerations of a product better than the person it’s designed for. However brilliant an auto safety designer or engineer may be, they’ve probably never felt the ethical weight of a school bus driver responsible for the safety of 30 kids.

Collectively creating an ethical future

Responsible innovation is one of the most important design challenges of the 21st century, and design thinking is critical for building an ethical future. No matter how big or small your scope of work seems, it’s part of a larger ecosystem with an unprecedented ability to impact individuals and societies alike. This makes elements like ethics and data science as intrinsic to a 21st-century design toolbox as storyboards or real-time collaboration files.

Judgment Call, Harms Modeling, and Community Jury are the first of many practices within our Responsible Innovation Toolkit. As we collectively expand what constitutes design and who should be considered a designer, we’d love to incorporate additional thinking and welcome your comments below.
