Designing against the deaf tax

How we can flip the script on tokenism

“Your baby has failed” isn’t a phrase any parent wants to hear. Yet for parents of children born deaf, their babies are labeled failures before even leaving the hospital if they don’t pass mandated hearing tests. The weight and impact of that label are never felt by the hearing population because, in an audio-dominant world, there’s no such thing as a mandatory sign language test. It’s no surprise, then, that systems fail to design for what dominant culture does not see or value: the creativity, brilliance, and depth of deaf culture.

When my parents found out I was deaf, my mother cried, worrying that I would have to navigate the challenges she and my father endured. Like me and my sister, both of my parents are deaf. As author Isabel Wilkerson describes in her book about race, Caste: The Origins of Our Discontents, my parents experienced firsthand a world that is run by a caste system that is “about respect, authority and assumptions of competence — who is accorded these and who is not.”

From medicine to education, our societal systems are developed and implemented based on specific policies, attitudes, and institutional practices. It’s not biology that disables people in the Deaf or Disabled community; it is the mismatch between our abilities and the systems not designed for us. These mismatches place a disability tax on Deaf people at an early age: for example, having to fight for accommodations like sign language interpreters for school, work, or healthcare, or having to find the courage to speak up in the face of discrimination.

As disability rights activist Haben Girma says in her book Haben: The Deafblind Woman Who Conquered Harvard Law, “They designed this environment for people who can see and hear. In this environment, I am disabled. They place the burden on me to step out of my world and reach into theirs.”

Neither of my parents learned sign language until I entered elementary school because their [hearing] parents were told that they had to ‘talk’ (that is, use speech) to succeed in life. When a child endures such language deprivation, they don’t get adequate exposure to other forms of effective communication, which delays their cognitive, social, and emotional development.

But despite the exclusion, ridicule, and language deprivation my parents faced, they were never without hope. Ultimately, having my sister and me would give new meaning to my parents’ personal histories and broaden their perspective in profound ways. As a born failure, I grew up in a world of mismatches, but as desktop computers became more readily available, those mismatches would lead me down a path of using my lived experience to break barriers in society, design, and technology.

As Dr. I. King Jordan, the first Deaf president of Gallaudet University, says, “Deaf people can do anything hearing people can, except hear.”

Technology cracks the door open

When I was growing up, there weren’t really computers in classrooms, and since most kids my age didn’t care for them, I was one of the first kids in my area to start using one. Since I had limited ability to communicate with my classmates, computers helped me fill the time. My first computer was a PowerMac G3 Desktop, gifted by a cousin who ran his own design firm. I tinkered with different programs and video games like Backyard Baseball (1998), and the door to my future slowly opened.

I got my second computer in 7th grade, because my parents were trying to be creative about how I could keep up with note taking in class. Pencil and paper weren’t working for me. My eyes were focused on my interpreter, the whiteboard, and just taking everything in. Couple that with the fact that teachers don’t stop speaking just because you’re looking down to write, and it’s no surprise that I missed a lot of information. Typing notes was much faster than writing, so my school provided a PC, and that widened the doorway to technology. It was a Dell Inspiron 8100 running Windows 2000, built like a tank and really heavy! I spent a lot of time on that computer and surfed the Internet quite often to learn about just about anything. Ask Jeeves was my best friend, eventually replaced by MSN search and Google.

Access to information was my lifeline to learning about the world, and I got it through technology because I didn’t receive information from conversations around the room. This wasn’t from lack of effort. I took over 15 years of speech training and learned to speak quite well to try to meet people halfway. The problem was, when they responded, I could only partially understand, nodding my way through communication mismatches. This led to shallow interactions, and pretending didn’t feel good because there was always something lacking (a true human connection) when people were not willing to meet me halfway. These days, I rarely speak. Sign language gives me 100% communication, and I also use a notetaking app with speech-to-text on my phone to interface with folks who don’t know sign language.

Using a Deaf lens at Microsoft

As a designer at Microsoft, I often think about my lived experience and about bringing empathy to the mismatches I face daily. I may be an avid user of technology, but there is plenty of room for technology to grow and fill accessibility gaps. I advocate for accessibility not because I want to, but because I have to. Design should be about how Deaf people experience the world, anticipating every need, and shifting to prevent issues later in the product cycle. Differing lived experiences inform our unique perspectives, creating an exchange that can open opportunities. Overlooked problems get discovered, and the approach to solving them becomes more mindful and effective.

As a profession, design has evolved tremendously in the past few years, moving from the aspirational towards the functional. UX mismatches in the systems we use still exist because, while those systems are built for a very wide set of users, they still lack all of the necessary elements for me to be accommodated. To solve these problems, we sometimes need to focus on “design for one and solve for many.” In other words, when we focus on designing for a single problem (or disability), it helps us navigate the problem space writ large using empathy, our lived experiences, and co-design principles.

Part of what catalyzed many recent changes in design thinking was the 2020 pandemic and the rapid transition to remote work. Deaf folks quickly found that video conferencing was the great equalizer because for once we were on equal footing with our peers. Meetings were more mindful and easier to track because people couldn’t talk over each other. This made work easier for Deaf people as others modified their behaviors to ensure clear audio or take turns when speaking in meetings. Deaf people could also rely on captions to figure out who was speaking or to catch information that interpreters may miss due to accents or things being lost in translation. For the first time, millions of people were experiencing Deaf gain. Communication and human connection through technology were improved by the Deaf community because anyone could use those features (including folks forced to mute their audio thanks to a screaming kid in the background).

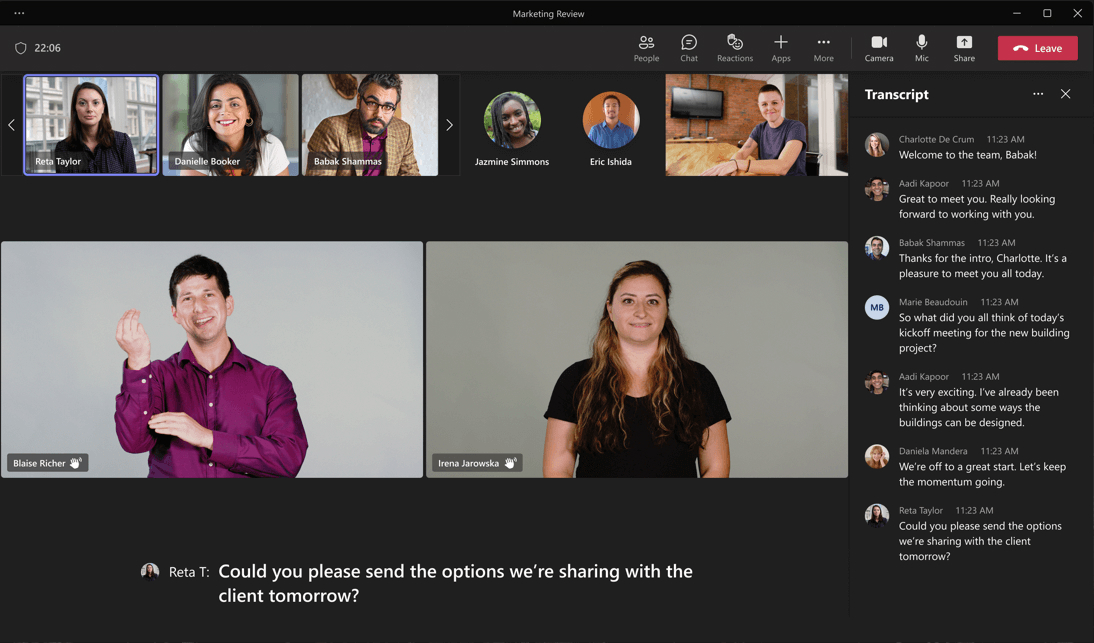

UX for Sign Language View in Teams. The D/HH experience in Microsoft Teams has driven the development of accessibility features like Sign Language View, designed specifically for those who rely on sign language for communication. Through a collaborative co-design process with the D/HH community and sign language interpreters, we have developed features that ensure sign language users can perform at their best in any virtual collaboration setting. Key enhancements (such as the ability to manage and prioritize a list of signers, improved video quality for clearer sign language visibility, and simplified meeting controls) empower D/HH users to engage quickly, fully, and confidently.

The creation of Sign Language View in Teams

One shortfall of this new way of working, however, was the existing caption solutions. It was painful for my Deaf peers and me to have to turn on captions with each call. Every time, you had to look for and prioritize the interpreters, open the chat window, and only then be ready to participate in meetings. This design mismatch led two Deaf employees (myself and a coworker) to create a UX proposal for Microsoft Teams that would be more accommodating for Deaf users. Sign Language View was born out of a mismatch that we as employees faced daily, to the point that it was adding to our disability tax. These days, I can join meetings with less friction than before, but there are still more doors to open and work to do!

With AI, it’s truly an exciting time in tech and so much is yet to be determined. This makes it critical to enter the space with curiosity, humility, and nuance. People in the Deaf and Disabled community operate outside of societal norms, attuning us to gaps often missed by others. That creates a very sensitive, nuanced lens, and curiosity is also often at play. When your ability to succeed is determined by how creatively you can hack a system that doesn’t work for you, it necessitates innovation. In totality, this gives us a lens to approach problems in ways that may be more holistic than traditional design best practices.

Co-design is great, but representation is an even better way to ensure people with lived experiences work directly to solve problems for their own communities. Ultimately, this leads to more equitable products for everyone and potentially more enjoyable ones, too. For example, people often see sign language as a lesser form of communication, but it’s highly expressive and an integral part of our cultural identity. People often want to travel to other countries to enrich their own lives by better understanding different ways of living. Living without sound is another way of experiencing life, and Deaf culture has its own social beliefs, behaviors, traditions, art, and values.

What if the 90% of deaf children born to hearing parents were told that they now had the chance to dive into a culture rich with language and expression, rather than being given the “bad news”? I think it could help erode the disability tax over time, leading to products and systems that are truly meant for all.

Header imagery by Karan Singh.
