Co-Create, Iterate, Keep the Human at the Core

Welcome back to Think First: Perspectives on Research with AI. Last time, Savina Hawkins revealed how AI is transforming every stage of the research journey at Anthropic. Today, we’re hearing from Chelsey Fleming, Research Lead for the Gen Media team at Google Labs, where she explores the intersection of generative AI, social sciences, and the arts.

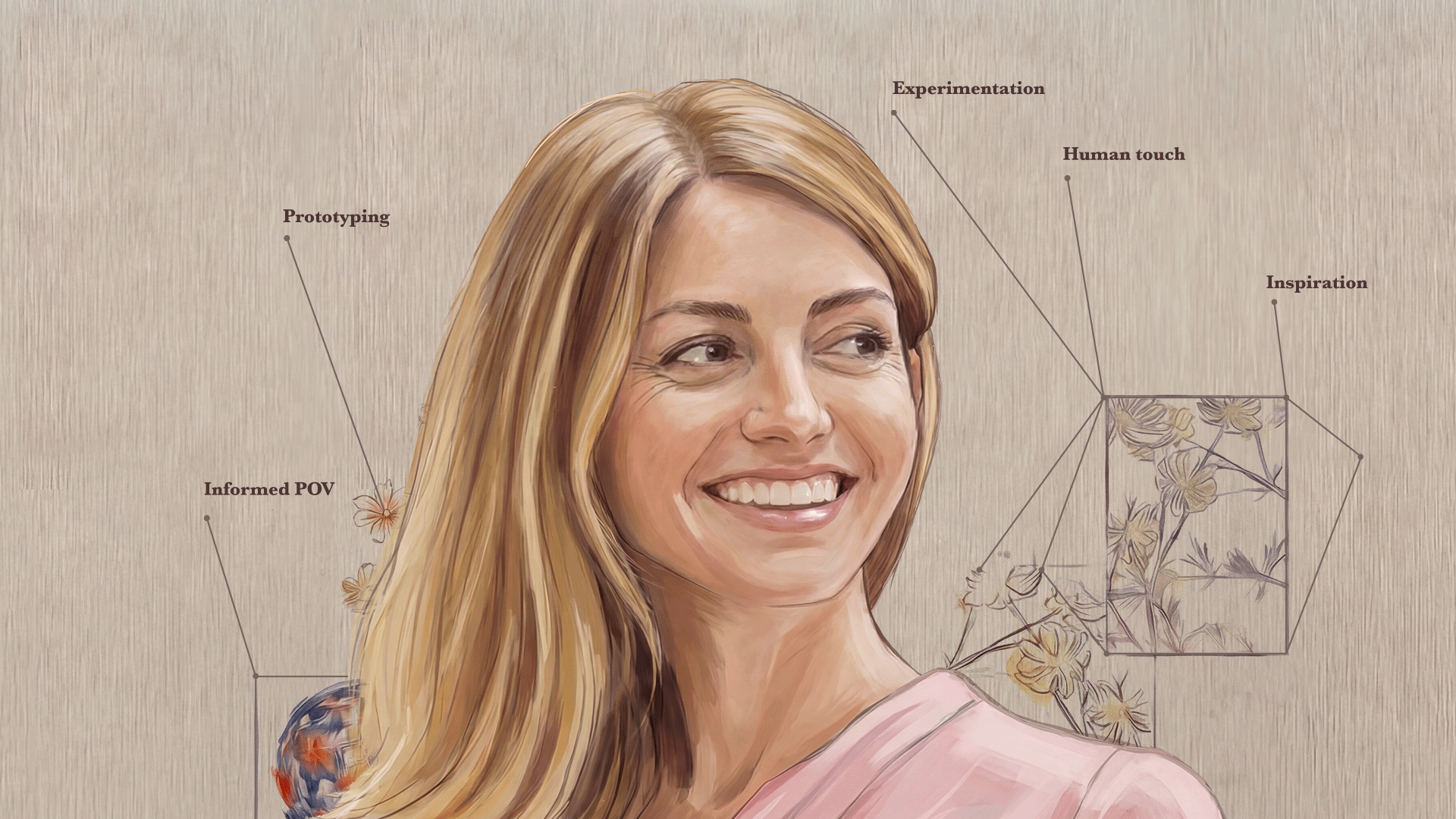

With a PhD in archaeology and a background in museum curation, Chelsey brings a unique perspective to technology, focusing on how culture and institutional influence shape visual preferences across time. Since joining Google in 2017, Chelsey has worked on zero-to-one research for emerging AI products, and now leads research for Labs’ Gen Media team, which includes video, image, music, and games. Her approach to AI in research emphasizes experimentation, efficiency, and maintaining a strong human touch—advocating for thoughtful integration of AI tools to augment, not replace, the researcher’s voice. Chelsey is known for her commitment to co-designing with real people and her belief in using AI to tell better human stories in research.

In our conversation, we explored how Chelsey approaches integrating AI into UX research, balancing efficiency, creativity, and a distinctly personal perspective while navigating the opportunities and challenges of emerging technologies.

From Archaeology to AI: Chelsey’s AI Origin Story

As someone who’s been researching AI products for several years, Chelsey has gradually experimented with leveraging AI in her own research practice; over time, it has become a staple in her workflow.

“I would say maybe five or six years ago, I started thinking about bringing it into my research practice. But what that looked like five or six years ago is really different than how I use it now, obviously. Early on, I had access to things that probably many people did not. But I remember when our team was experimenting with low-code/no-code prototypes, for example, some of the early LLM experiments, I remember thinking about ways that I could bring those into my research.”

Protecting the Human Voice in Research

Today, Chelsey uses AI in both lightweight and more advanced ways—summarizing qualitative notes, organizing data, and even creating podcasts of her reports to gain new perspectives. However, she draws a clear line:

“I have a very strong point of view about the degree to which I let AI touch my own notes. I feel pretty strongly that I should be giving AI the source material, not using the AI to generate the source material. I preserve a very sacred human and personal touch with what I put out to my team so that I have confidence in it.”

She avoids sharing AI-generated summaries or insights with her team, preferring to keep what she shares concise, readable, and trustworthy.

“I don’t share AI summaries. I don’t use AI to generate insights, not just because I don’t think they’re very good insights, but also because I think they’re painful to read. I really don’t enjoy reading things that are lengthy LLM word salad.”

While AI can automate routine tasks, Chelsey is wary of using it for core research outputs:

“I don’t really want AI to write my research reports for me, but I do want it to file my expense report and submit all my receipts for me.”

Prototyping and Efficiency: Where AI Delivers Value

Chelsey and her team increasingly leverage AI tools that enable rapid prototyping, even for those without coding backgrounds.

“My team and I have been experimenting with tools that enable you to prototype on your own, which is really cool as a researcher, at least from my perspective, because I don’t have a coding background at all, so being able to use some of those tools to spin up a quick prototype—not that I expect anyone to build it—but so that it can help you express your idea. I think it is a pretty powerful shift in capabilities.”

AI also accelerates her workflow: she uses LLMs to quickly summarize academic articles, helping her decide what’s worth reading.

“So, like every researcher, I wade through a lot of Google Scholar articles, and I’m often trying to figure out if it’s worth my time to read. So, I’ll dump them into Gemini and have it tell me what the arguments are, and then I’ll choose which ones to read from there. So yeah, it’s definitely making me more efficient. Not that efficiency is the sole goal we should strive for.”

Integration Friction and the Limits of AI Tooling

Despite the promise, Chelsey is candid about the friction points. She observes that researchers often create workarounds to bridge gaps between systems.

“There’s a very big overarching friction across many if not most AI tools right now, which is integration with other tools and processes. A lot of people that I interview, and myself included, you see people coming up with these weird workarounds to get things to play nicely together. And I think we’re increasingly seeing that move in the right direction, but it still could be a lot quicker and easier than it is.”

It’s a reminder that while AI capabilities accelerate, the limited connective tissue between tools will continue to create bottlenecks.

Human Touch vs. Automation: Knowing Where to Draw the Line

When it comes to human-in-the-loop research, Chelsey believes in a nuanced approach:

“I think it’s all a game of degrees. Like how important is this to you? How much do you care about how accurate or personally reflective this information is? I am of the mind that every single thing I share out with my team should be of a quality that I can stand behind. And if I’m not confident in it fully, that’s OK. But it needs to be clear why I’m not confident. And AI is still a black box where you can’t interrogate your data or ask why. Should you really be sharing that data? I feel very uncomfortable with that.”

For Chelsey, AI is best suited for low-stakes tasks; for research insights, she wants to ensure traceability and confidence in the data.

“I think there is a lot of discussion around AI where people seem caught up in the magic, and I think that can lead to some pitfalls. So, I feel pretty strongly that I should be starting with my own source material when I’m using it for efficiency. Now there are exceptions, for instance, a quick AI slide template or something, that’s relatively low-hanging fruit. That feels fine. But when it comes to research and putting that information out there and standing behind it, I want to make sure I know why we’re saying what we’re saying. And if someone comes to me and says, ‘where did this insight come from?’, I can answer that question.”

Beyond Efficiency: AI for Inspiration and Creativity

Efficiency isn’t Chelsey’s only goal. She values AI for its ability to inspire new perspectives and foster creativity. Collaborating with artists and creatives, she sees AI as a tool for rapid visualization and concept development:

“I work in Gen Media, so there’s efficiency, creativity, and inspiration happening there. We collaborate really closely with a lot of artists and creatives and experts in their domains, and I see them doing really incredible, cool things with these tools…a lot of visualization, bringing an idea to life, bringing an early-stage concept to life quickly or with very little resources can also be a really powerful use.”

Trends and Cautions: The Synthetic Data Debate

While other researchers, like Josh Williams, have started to adopt synthetic users, Chelsey remains cautious about synthetic data—whether personas, users, or survey respondents.

“I’m not opposed to all synthetic data, but I don’t think it’s data and it’s certainly not magic. I guess it just depends on how confident you want to feel in your predictions and how much you want to stand behind the information that you’re sharing… ‘Synthetic data’ encompasses a wide range of different types of data and applications, and it’s important to evaluate each and understand applications and limitations. It’s neither ‘all good’ nor ‘all bad,’ but it does require additional context.”

She emphasizes the importance of understanding how models are trained and the degree of confidence one can have in synthetic outputs.

“I think one of the reasons I tend to be cautious is it’s not always clear how models are trained for synthetic data applications. There are totally fair and often ethical reasons to use synthetic data. But it’s important to train on ethically fair source material as opposed to trying to take material from elsewhere.”

Chelsey’s advice for the skeptics: experiment to develop your point of view.

“I’ve made a point to experiment with synthetic data because I’m skeptical of it. For any researcher who’s skeptical, it’s worth experimenting. It helps you flesh out a POV. It helps you understand the limitations and constraints. For example, my incredible student researcher and I ran a study where we compared a synthetic data set with human data to see where it varied, and it varied significantly enough that I would have real hesitation around just leveraging that synthetic data.”

Looking Ahead: AI’s Role in the Future of Research

Chelsey is hopeful about the future, envisioning a shift away from novelty and overconfidence toward meaningful research:

“I hope we’re starting to get over the novelty factor around some of the AI capabilities that cause us to have too much confidence. I mean AI is an incredibly powerful capability that will continue to enable serious advancements in science and research moving forward, but I hope that we head in a direction where we start to value the capability, but also lean into AI to help us tell better human stories in research.”

She sees AI as a powerful enabler for infographics and visualization, but, like Rodrigo Dalcin, she insists that the foundation must be strong human stories grounded in real conversations.

Advice for Researchers: Experiment and Co-Create

Chelsey’s advice for teams starting with AI is simple:

“Experiment. Experiment so that you understand what’s useful for you and what’s not… Experimentation is pretty low stakes these days. It’s pretty low cost. That’s a huge luxury. And so, experiment actively so that you can give yourself the space to learn, the space to be critical. Develop that point of view on what you think is good for you.”

She also encourages researchers to co-design and co-create with real people, not just within the “AI Twitterverse,” but with artists, creatives, and everyday users.

“It’s very important not to over-index on that segment in our space and to work with artists and creatives and regular people.”

As the boundaries of research and technology continue to blur, Chelsey’s journey reminds us that the true power of AI lies not in replacing the researcher’s voice, but in amplifying it. By embracing experimentation, maintaining a critical eye toward synthetic data, and prioritizing co-creation with real people, we can harness AI to tell richer, more authentic human stories. The future of research isn’t about chasing novelty—it’s about leveraging AI thoughtfully to deepen our insights, foster creativity, and ensure that the human touch remains at the heart of every discovery.

Next up: Don’t miss our conversation with Rose Beverly, Senior Staff AI UX Researcher at PayPal, as she shares her multidisciplinary approach to integrating AI in research and her perspectives on the future of intelligence and work.