
Centering sign language in AI and design

A Deaf-led approach to making Sign Language a core principle of inclusive design

As long as we have Deaf people on Earth, we will have Sign.

What if Sign Language were the norm? Everywhere you go, talking hands fill the air. Actors speak through gestures in every movie and TV show. During video calls at work, your colleagues use Sign Language to communicate with ease, but when you speak aloud, nobody hears your voice or sees your mouth move. The platform never identifies you as the active speaker or brings your video forward, so no one sees you talking. It’s like you’re invisible. Their signing is transcribed as contributions to a productive meeting, while your ideas are miscredited because your words go undetected.

You’re trying to belong in a digital space that doesn’t see you.

How would that make you feel?

Elevating Sign Language in the digital age

Seven hundred million Deaf people worldwide are disabled by a world that was not built for them. While not all Deaf individuals use Sign Language, for those who do, it is a rich, visual language built on motion, depth, dimension, and expression. Our hands sculpt layered meaning, silent to many but never without voice. Like everyone else, Deaf people seek lives full of light, color, and sensation. Yet in the digital spaces where we work, create, and connect, support for how we communicate is limited at best and often nonexistent. Bridging that gap demands thoughtful, sustained action rooted in a deep understanding of what it takes to build truly equitable and comparable experiences.

In tech design, accessibility has often been an afterthought, with assistive technologies developed reactively rather than built in from the beginning. Accessible design has its roots in activism, like the powerful “Capitol Crawl.” On March 12, 1990, around 60 people left their wheelchairs behind and crawled up the steps of the U.S. Capitol, highlighting the building’s inaccessibility. Their protest helped spur the passage of the Americans with Disabilities Act (ADA), a landmark law that made physical accessibility both a legal requirement and a civil rights issue. The ADA started the conversation, giving people the language, rights, and leverage to demand better.

As technology took off in the 2000s and 2010s, with the rise of the web, mobile apps, and digital services, it became clear that digital exclusion could be just as harmful as physical barriers. Inclusive design gained momentum as digital product makers realized accessibility had to go beyond checklists and compliance. By centering lived experience and co-designing with people with disabilities, the industry has come to accept disability more widely as a dimension of human diversity, not just a problem to fix. This marks a shift from focusing only on compliance to unlocking new design paradigms that use empathy and collaboration to innovate.

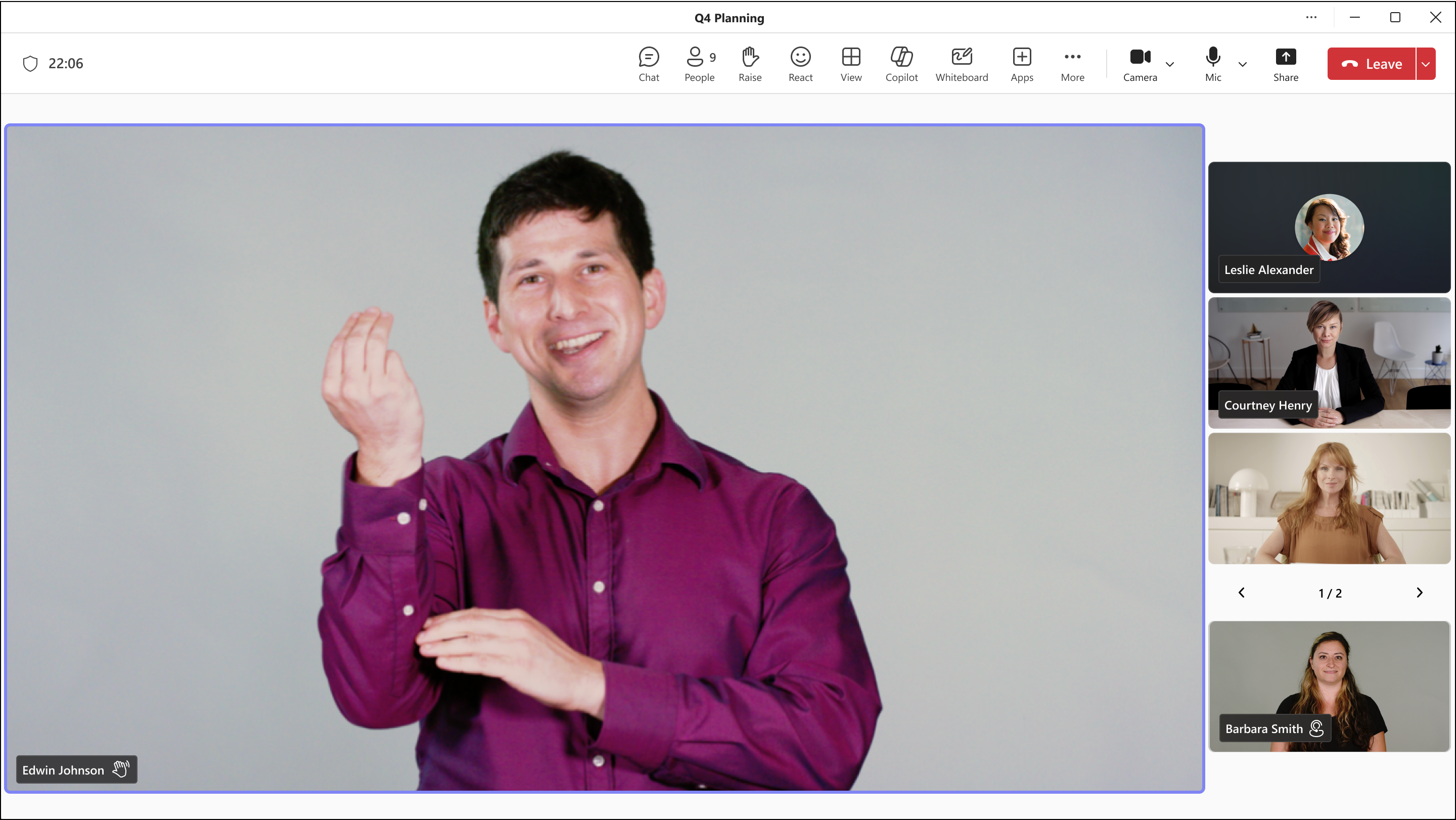

Introducing Sign Language Detection in Microsoft Teams

At Microsoft, we have a long history of supporting linguistic and cultural inclusion, from Indigenous languages like Cherokee to the West African script ADLaM. But this commitment is not only about preserving written and spoken languages. It also means recognizing Sign Language as a full and equitable mode of communication, embracing it as an authentic part of our global language landscape, and exploring how we can more fully integrate Sign Language into the products and experiences we build.

As we conclude our celebration of Disability Pride Month and mark the 35th anniversary of the ADA, we are proud and excited to announce that our new Sign Language Detection feature is now available as part of Sign Language Mode in Microsoft Teams.

Nothing about us without us

To effectively build inclusive features, we believe in shifting accessibility left by embedding it from the very beginning of the design process. This means going beyond the bare minimum of designing compliant experiences and empowering people with disabilities to lead the way in crafting features driven and shaped by lived experience. Firsthand perspectives on the challenges being addressed drive innovation, challenge assumptions, and lead to solutions that are more grounded in reality, inclusion, and humanity. Ultimately, that creates greater impact for all communities.

That belief is at the heart of our initiative. We are a collective of Deaf full-time Microsoft employees working across the company as architects, designers, engineers, program managers, and technical support staff. In addition to our core roles, we are leading an AI-driven, Deaf-led initiative to shape how Microsoft builds Sign Language experiences. By embedding our voices throughout the process and partnering with product teams across the company, we are working toward a future where digital communication is intentionally built for and truly includes everyone.

When we first started in 2019, we quickly realized that before we could truly innovate for the Deaf community, we needed the underlying technologies to support the kinds of experiences we envisioned. That same year, we hosted the Sign Language Research Workshop, bringing together experts from across disciplines to explore the challenges and opportunities of using AI for Sign Language recognition, generation, and translation, fields that are often researched in isolation. The workshop laid the foundation for lasting collaboration and future breakthroughs. One of its key outcomes was the award-winning paper “The FATE Landscape of Sign Language AI Datasets: An Interdisciplinary Perspective,” which built momentum toward the milestones that followed.

From the pandemic to product breakthroughs: Sign Language View

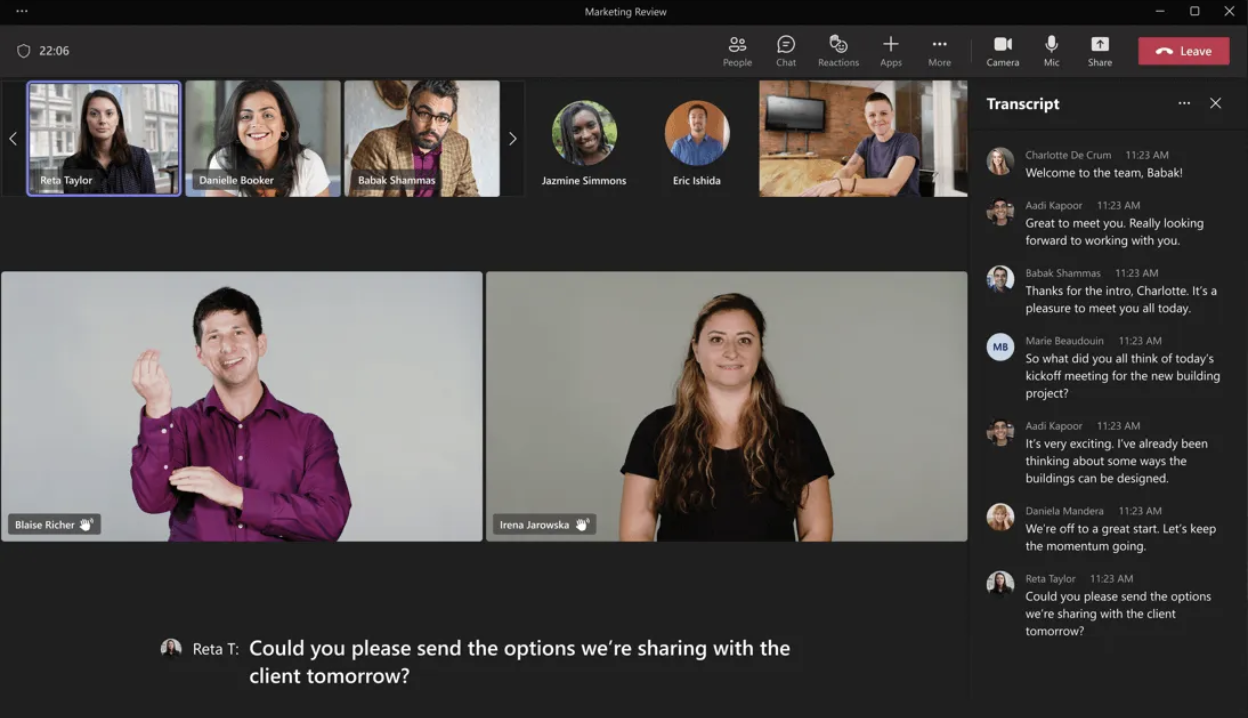

While many adapted to remote work at the beginning of the pandemic, for Deaf employees, the shift to virtual meetings exposed how poorly existing platforms supported Sign Language. Signing didn’t trigger active speaker detection, so video feeds of signing participants stayed minimized and out of view, even when they were actively contributing. Interpreters were present, but the platform prioritized their audio over the signer’s visual language. Other meeting participants often assumed the interpreter was the one speaking and referred to them by name, unaware that the message originated from the Deaf person. Captions and transcriptions reinforced this misattribution by labeling the interpreter as the speaker, further erasing the signer’s presence in the conversation and in meeting artifacts. Keeping signers visible required constant manual effort — searching, pinning, adjusting layouts, and managing secondary connections — just to stay involved. Layouts reset. Preferences didn’t persist. It was a mess.

These challenges weren’t just technical. They shaped how we showed up in meetings, how we were perceived, and whether we could fully participate. We needed a solution that recognized the dynamics of Sign Language communication and made Deaf presence visible by design. This led to the creation of Sign Language View in Microsoft Teams, a layout that allows Deaf and hard-of-hearing users to keep signers visible, regardless of who is speaking. It marked a shift in thinking: visibility should not have to be earned or managed manually. Just as curb ramps are standard on sidewalks and at building entrances, accessibility should be built into our digital experiences.

Though Sign Language View improved the experience, some of the bigger issues persisted. The platform still didn’t acknowledge signers the way it acknowledged speakers, and we put pressure on ourselves to fix this.

Developing our own AI model for Sign Language Detection

As AI continued to evolve, we saw a powerful opportunity to ensure the Deaf community was not left behind. Over the past two years, we have worked to define clear, practical milestones rooted in today’s technology, while keeping our eyes on a future where AI fully understands and supports Sign Language as a natural and equal mode of communication.

We know that Sign Language generation, recognition, and translation technologies across the industry are not yet reliable enough for the Deaf community to fully trust. But we also saw the potential for these tools to start making a difference now. One of the most immediate and impactful areas was detection. We knew that if a platform could detect someone signing, it could begin to treat visual language with the same legitimacy and responsiveness as spoken language. That recognition could unlock entirely new experiences built around the needs and contributions of our community.

In partnership with internal research teams, we developed a lightweight, state-of-the-art AI model capable of detecting signing activity in real time. We integrated this model directly into Microsoft Teams, enabling the platform to recognize when someone is actively signing and automatically elevate them to active speaker status, just as it does for those speaking verbally. We see this as a small but meaningful breakthrough that brings us closer to an equitable meeting presence and helps ensure that signing participants are not overlooked, but instead seen, acknowledged, and centered in the flow of conversation.

Many milestones of the future

Our north star is to design a comprehensive Sign Language generation, recognition, and translation platform that will enable first- and third-party Sign Language experiences worldwide. Sign Language detection in Microsoft Teams is just one step in our ongoing journey to make communication more equitable and accessible. We are building additional features, guided by the principle that Sign Language must be treated as a first-class modality in our products. The path forward is clear. It requires continued collaboration with the Deaf community, a commitment to inclusive design, and the belief that innovation should serve everyone. We are proud of how far we’ve come, and we are even more excited about where we are going next. Stay tuned. <3

Sign Language Mode is now officially available in Microsoft Teams.


To stay in the know with Microsoft Design, follow us on Twitter and Instagram, or join our Windows or Office Insider program. And if you are interested in working with us at Microsoft, head over to aka.ms/DesignCareers.
