
Behind the design: Meet Copilot

When the system is the product; on crafting the next generation of user experiences

After years of steady but incremental innovation, technologies are emerging that will bring about a true sea change. At first glance, large language models (the technology behind next-generation AI like OpenAI’s ChatGPT or DALL-E) seem like the missing piece that will help user interfaces adapt themselves to humans. It’s amazing, nascent, and full of possibilities. But unlike the AI we’re used to, which powers everything from customized news feeds to email autocomplete, this new technology moves us from AI on autopilot to AI as copilot. It’s a very different mental model requiring wholly new methods of interaction, and our design and research community has been diving deep to learn and understand as much as we can.

At Microsoft Design, we knew we needed a perspective on how to create a design system that helps the technology serve humanity, furthering human agency and collaboration. We also knew we needed agile, flexible design and engineering processes, because with technology this new, constant iteration is required as customer feedback comes in and we keep learning.

Copilot, our new AI experience, combines large language models with Microsoft 365 apps and data in a powerful conversational UX. A film introducing Copilot explores its UX/UI elements.

On March 16, we announced Microsoft 365 Copilot — your copilot for work. Copilot combines the power of large language models (LLMs) with your data in the Microsoft Graph and Microsoft 365 apps — your calendar, emails, chats, documents, meetings, and more — to turn your words into the most powerful productivity tool on the planet.

Copilot is a pioneer in conversational UX, a new frontier of user interface design as paradigm-changing as the first touchscreen devices. We believe it has the potential to transform how people interact with and use technology, making things easier and opening new possibilities. Its power, however, must be framed by simple, powerful UX that’s rooted in ethical considerations. With Copilot, we rethought everything from visual identity to interaction design as we aimed to create a truly valuable user experience.

To truly unlock this technology’s potential, we must challenge our current perceptions of technology. Many of our subconscious ideas about AI have been shaped by movies and books. They often present a binary choice between humans and machines, promoting notions of total automation where machines do everything for us. But the true power of next-generation AI lies in its ability to augment human potential. This is a driving principle of our Copilot experience, where collaborative back-and-forth interactions with AI ensure you’re always in control, steering Copilot toward your personal goals. As designers, we must craft UX that fosters an understanding of this relationship, helping people adopt new mental models that will move everyone forward in positive, productive, and ethical ways.

These new mental models must be rooted in what we call “appropriate trust,” which is when people understand the technology’s capabilities and limitations and are empowered to use it responsibly. While Copilot can be amazing at tasks like generating content or facilitating group collaboration, it may also generate answers that are imperfect or need refining. The better people understand these limitations, the better they’ll get at using it.

On a personal note, I’ve found that Copilot doesn’t just help me communicate more clearly; through the collaborative process of working with it, it has also taught me how to communicate better. It’s helped me grow and, as a designer, it has rekindled my creative spirit because it’s such a dynamic medium. Even when it gets things wrong (which it does, and it’s critical to convey that), the process of reading and refining the output can spark fresh ideas, inspire me, and affirm what I know to be right. That’s why we’re striving to ensure people always feel empowered and in control.

We often talk about designing in the open here at Microsoft, and it’s hard to think of a more profound context in which to do this. With this new technology, new capabilities and uses are still emerging; as product makers across the industry begin building with it, we felt it vital to share the process and frameworks underpinning Copilot so that we can collectively learn, grow, and evolve.

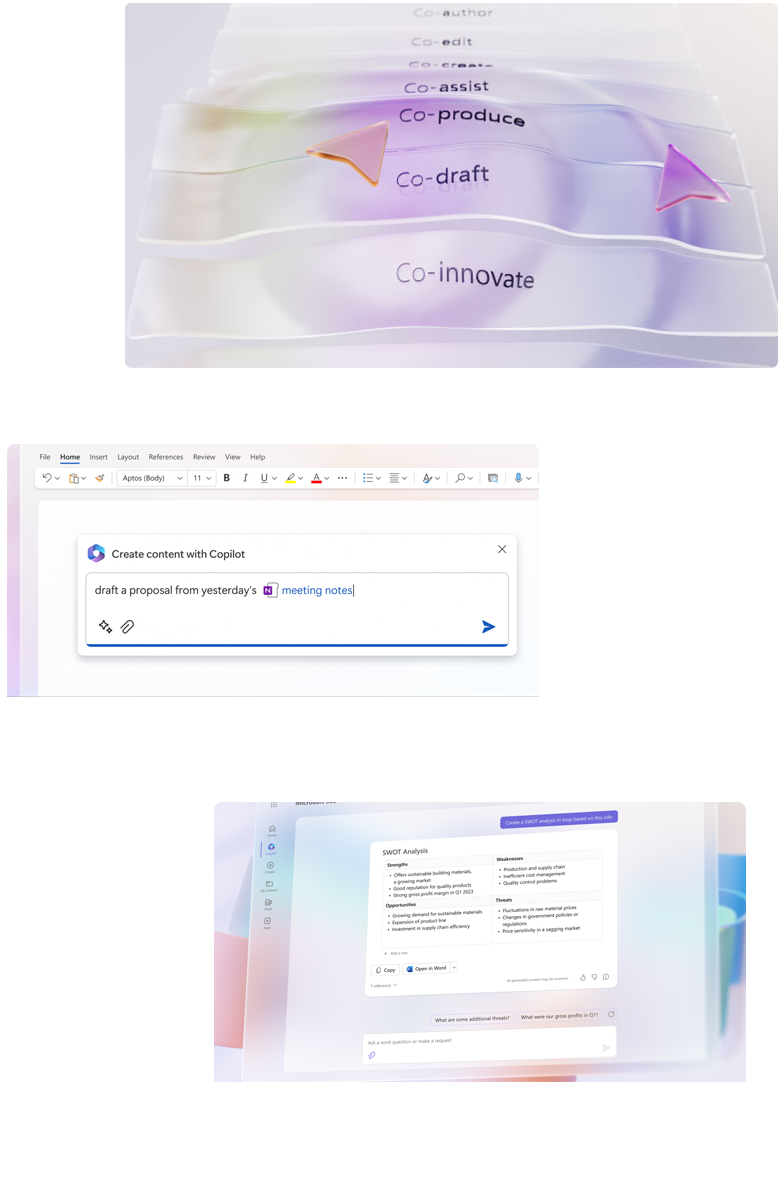

AI for All Altitudes

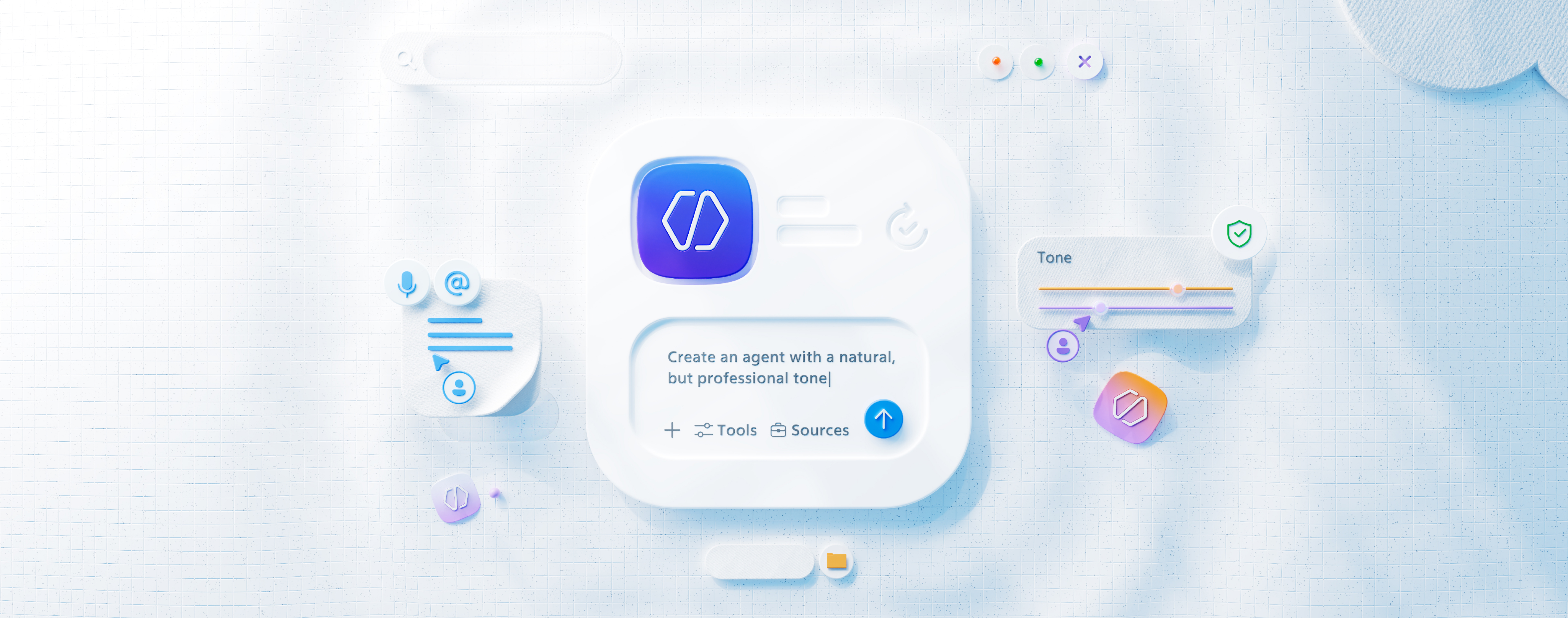

Productivity unfolds at multiple altitudes; sometimes you’re in the weeds working on nitty-gritty details, while other times you need to see the big picture or strategize. The demands of work and life today often exceed our cognitive capacity, creating stress, anxiety, and lost productivity, which is why Copilot’s ability to free up people’s creativity, energy, and time is so promising. By mapping the abilities of LLMs to different human cognitive needs and abilities, we came up with a three-part framework to empower full-spectrum productivity across and within apps: immersive, assistive, and embedded experiences.

Immersive experiences are geared towards those times when work spans multiple tools; requires deep, contextual understanding; and likely combines the need to create, collaborate, and/or comprehend. A full-screen experience free from the boundaries of pre-existing apps, plus the combined power of LLMs with your business data and context, allows you to uplevel your skills and unlock productivity. From creating drafts of pitch decks or business proposals to helping you prepare for the week’s most important meetings, Copilot will adapt to your needs across work and life.

Assistive and embedded experiences, by contrast, are for those times when you want to accelerate the speed and quality of work within specific apps. Cognitively, this is when you’re focused on more singular types of work and need focus and support to be more creative in Word, more analytical in Excel, more expressive in PowerPoint, more productive in Outlook, or more collaborative in Teams. These show up as adaptive components or modules that scale from embedded experiences within a specific app to wholly immersive experiences where you’re conversing with Copilot. From invocation to instruction, they bring consistency to the overall interaction with Copilot. They also always work within the flow of your current task by understanding the context of what’s on-screen and bringing familiar graphical capabilities to you.

Together, these three altitudes of work support not just tasks but workflows across apps and platforms, which are notoriously hard to achieve in digital environments and collaborative, multiplayer contexts. By meeting you wherever you are within the Microsoft 365 ecosystem, working across your business data to keep knowledge flowing freely across your organization, and automating mundane or repetitive tasks, Copilot helps you stay in the flow.

A UX foundation designed to empower

When designing Copilot experiences, rooting every design and engineering decision within an ethical framework that prioritized human agency was essential for us. From the UI patterns to Copilot’s visual identity, we wanted to express these values. While we could easily write an entire blog post about each of these, here is an overview of some of the core foundational elements.

We had deep discussions about how much control versus how much guidance to give users. Do we completely obfuscate the AI and just give users a button that says “summarize”? Do we give them an open text field with some suggestions? With a higher level of user control comes a higher level of user responsibility, so if we’re putting a customer behind the steering wheel, we can’t hide the model behind a button; its capabilities must be accessible and understood. That puts the onus on product makers to set sufficient boundaries around things like harmful use cases, but ultimately it empowers customers far more than, say, giving them a list of buttons that each perform a fixed function.

In terms of the “how,” natural language is a great way to unlock the power of the model, but only if you grasp the nature and importance of turn-by-turn interactions. Until now, most tech products have been deterministic; the same core interactions happen in precise and repeatable ways. LLMs inch us toward probabilistic products that are imprecise and non-repeatable. It’s not even possible to hardcode the model’s responses because they unfold anew with each interaction. When designing conversational UX, we believe turn-by-turn interactions allow you to explore the model’s capabilities, while also steering it back toward intended use cases if needed.

Education is paramount

Zero-state design, fallibility notices, clearly stated intended use cases, prompt suggestions: we’re optimizing our designs to prioritize and promote education. Interacting with an LLM is new and potentially intimidating, so we want you to have a clear overview of its capabilities and limitations from the very first time you enter the experience. That’s a vital part of building responsibly with AI; ethical principles are moot if they’re not baked into the actual UI. So, how do we use zero-state design (i.e., what a person sees on screen before interacting) to teach people about mistakes the model might make and the need to fact-check outputs? We’re also exploring an “AI badge” to accompany all Copilot outputs that would let you learn more about the underlying technology whenever you tap or click it.

Similarly, the model’s output is only as good as the prompt you type in, and prompt writing is a new skill that takes time to master. Longer, more detailed prompts tend to give better results, which is why we created a prompt capabilities menu and include prompt suggestions with hints. Over time, as people become more familiar and comfortable with this technology, our designs will likely place less emphasis on prompting guidance, but for now it’s critical.

Making it worth the wait

Expectations around the speed of technology have changed since the days of the dial-up internet dance; today, we often expect immediacy, or something darn close to it. With LLMs, however, the sheer scale of the information being processed means responses sometimes take longer to generate than we expect. But if those extra seconds save you an hour, it’s worth it, and designers can use this wait time to create transparency, just as we use the zero state to educate. This could include anything from dialog boxes reminding people to fact-check the response to information about how the model generates its answers. We want to think about how to use latency to reinforce learnings about the model’s limitations, as well as ways to creatively infuse delight. How might we turn a moment of simply waiting into eager anticipation?

Creating intentional friction

When it comes to ensuring human agency, one of the biggest risks is fostering overreliance on the model. Copilot isn’t an autopilot; it requires human oversight and collaboration, both because it can generate inaccurate answers and because the most powerful results come from back-and-forth interactions where tweaks are made on each turn. This becomes particularly critical when sharing Copilot results with others (say, a summary of OKRs from a certain fiscal year, an onboarding document, or an event recap). Copilot results link to citations and, in some cases, share more information about a source when you hover over it. These results should still get human review, however, and as designers we can create intentional points of friction to encourage it. Before someone shares something, for example, we can ask whether they’ve fact-checked it or whether there’s been any human oversight. Content designers have a significant role to play in interactions like this, because how we word something can make or break whether someone pays attention to what’s being asked of them.

Communicating through a visual identity

From a visual perspective, we wanted to use color and iconography to create and reinforce an understanding of the AI moments across Microsoft products. Product brand colors and vibrant accents make the product come alive when you interact specifically with AI features, clearly indicating that the model is active during moments of latency and differentiating it from the surrounding UI. The visual identity also clearly shows when Copilot is in use and/or has produced content; our aim is to build trust by making clear when to bring your own judgment to critiquing the output.

Moving forward with a learn-it-all mindset

Moments like the present are sacred spaces for product makers. Only rarely does a technology come along that truly changes the game, and across all disciplines, we’re keenly aware of the power and impact of LLMs. With Copilot, it’s not just about LLMs as a medium but about the ecosystem they live within. Microsoft 365 is one of the most powerful productivity suites in the world; the breadth of its abilities is why governments, hospitals, and schools alike run on it. Situating the power of LLMs within it creates unique and remarkable potential to level up human abilities and support human needs.

This is an exciting, humbling, and significant responsibility for the design community, and the default settings, design tenets, and UX frameworks we choose matter deeply. Just as important are the processes we embrace around emergent technologies. New models could arise within the next six months to a year, and we need agile, flexible design and engineering processes that leave ample room to incorporate new research insights and customer feedback. That’s why we’re previewing these designs, as new as they are — to learn from enterprise customers in a safe preview environment. We can’t design behind closed doors, in a tech vacuum or bubble disconnected from everyday people. We must actively seek feedback, embrace a learn-it-all attitude, and share learnings by designing in the open. We must also always seek to root what we’re building in ethical considerations and universal human needs; the tech itself may change, but those remain far more durable.

And as for Microsoft Design, we’ll continue to share our learnings, updates, and designs as the year progresses, so stay tuned and please share your feedback openly and often!

