
AI-accelerated research: Speed and scale, without compromising rigor

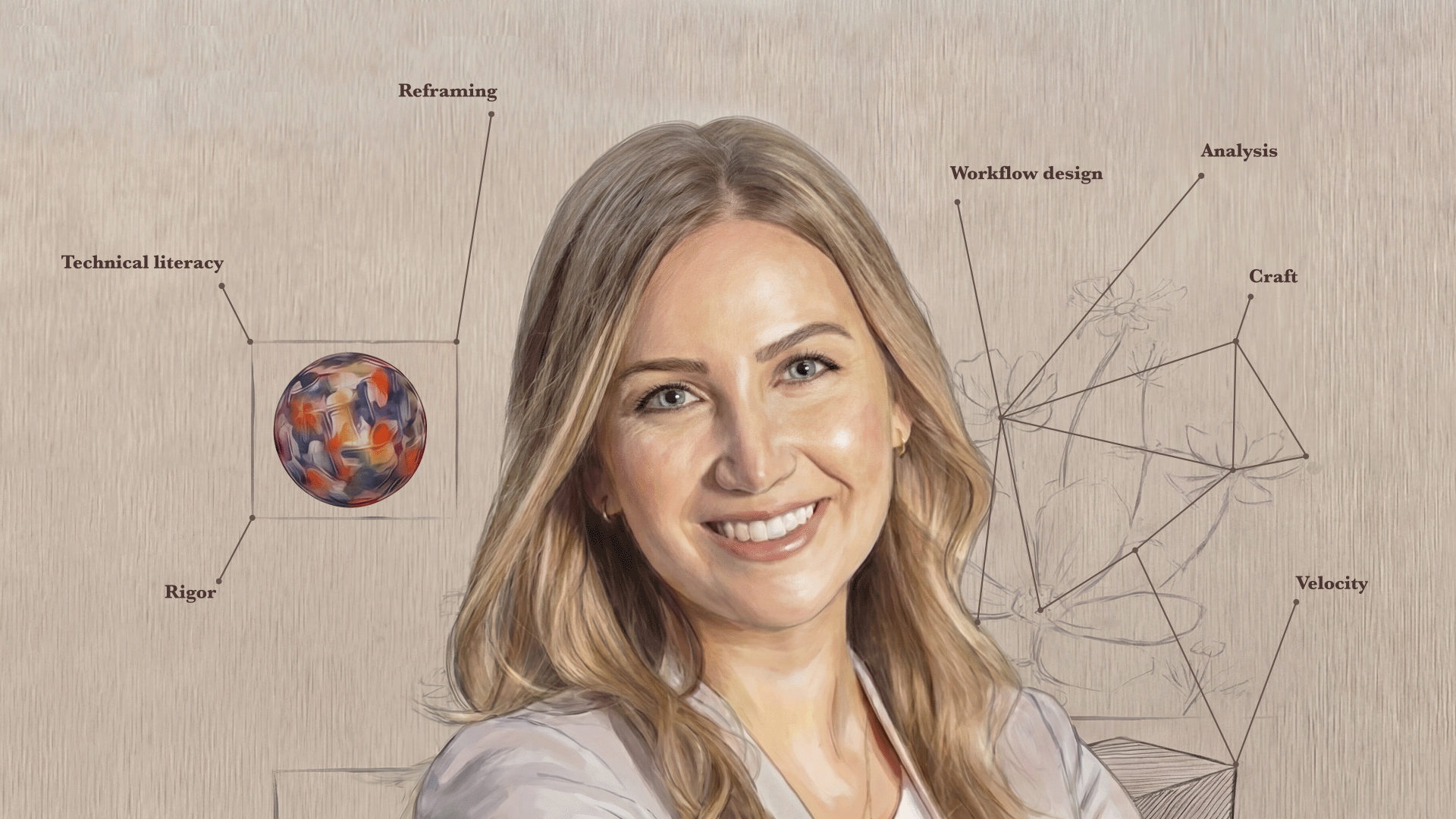

Welcome to another edition of Think First: Perspectives on Research with AI. Last time, we heard from Rodrigo Dalcin, who shared how he’s harnessing AI to streamline research operations in a fully democratized research model at Wealthsimple. This week, we’re featuring Savina Hawkins, who leads UX Research for Claude Code at Anthropic.

Savina is a seasoned mixed methods researcher and technologist whose career spans pioneering work at leading organizations such as Meta’s AI Lab, Google’s AI Lab, and Eventbrite. With a foundation in independently designed programs at Harvard and Stanford focused on AI’s societal impact and technical underpinnings, Savina brings a rare blend of qualitative, quantitative, and data science skills to her practice. In her current role on Claude Code, she leverages AI at every stage of the research journey, from study design and recruitment to analysis and synthesis. Her approach is defined by a commitment to human-AI collaboration, methodological innovation, and scaling research impact.

In our conversation, we explored how Savina integrates AI throughout the UX research process, pivotal moments in the evolution of AI tooling, present-day challenges and risks, and where she believes the industry is headed next.

From prototype hacks to thought partnership: Savina’s AI origin story

Savina’s first “this changes everything” moment arrived with GPT-3’s API in 2020, which unlocked rapid prototyping for non-engineers. Under the pressure of Eventbrite’s COVID-era pivot, she built scripts for classification and summarization that compressed weeks of research analysis into scalable automation.

“I was able to actually take things that would have taken me weeks to analyze manually and automate them in a scalable way. And that really was the moment where I was like, ‘Oh my God, this is gonna completely change how I do my job, and it’s never gonna go back to the way it was before.’”

Her second inflection point came with GPT-4 in March 2023. No longer limited to “low-intelligence” tasks, the models became an always-on thought partner, able to reframe ideas, provide feedback, and collaborate cognitively. With analytical horsepower now paired with genuine conceptual support, she saw how AI would transform research workflows end to end.

“It felt like the first moment where the models weren’t just capable of low-intelligence tasks of classifying, putting things into buckets, very simple memorization or extraction, but it actually was good enough to be a thought partner with me…now we have the raw abilities to do methodological analysis, and we have cognitive capabilities to act as a thought partner to humans.

“When you bring those two together, this is going to completely transform how we do research.”

An AI-augmented week: Speed, scale, and impact

What would previously have taken Savina an entire quarter (or even half a year) to do, she can now accomplish in a single week. She walked us through her past week to illustrate the scale of her impact:

“I took six research studies I had already done, gave it to Claude Code and provided some framing, then had it pull all of the supporting evidence that already existed and assemble a very simple one-pager for a new hire. I was able to package the insights up for her in a way that she was able to completely rewrite her entire strategy doc with the new foundational understanding of what the problems were. This all came together in like an hour or so…like Monday morning 9:00 AM, and by mid-morning she basically had already rewritten her doc. Being able to surface insights at the right time to the right person in the right format—huge unlock.”

Later that week, she ran a rapid analysis for a newly formed, non-technical team working on a highly technical product. She analyzed hundreds of sales calls with Claude Code, extracted resonant moments, and synthesized campaign inspiration for the team. She also enabled self-serve Q&A on a prior study’s dataset and auto-assembled clips from user research videos.

“They had some ideas for campaigns they wanted to run, but no fully formed strategy yet. So, I pulled all of our sales calls from November, and then I built a structured analysis in Claude Code that went through and read all of them, extracted specific moments where the user said something that really resonated, then synthesized those into inspiration for campaigns. The team was super excited to leverage that.”

During the same week, Savina ran a series of AI-moderated interviews as a screening step for specialized recruiting. They identified which users would be most valuable to speak with about the team’s top strategic initiatives, connecting busy engineering teams with the most relevant user voices at the right moments.

“I’m doing a big ‘leading edge’ user study combined with a ‘designing for the margin’ study—pretty big study scope. And for this one, I wanted to do specialized recruiting. So, I ran AI-moderated interviews to basically figure out who would be most valuable for the team to talk to…If that wasn’t already enough, I launched 10 different user research studies all in one week.”

Savina now leverages AI at every stage of the research process. That includes everything from outreach copy and stratified random sampling to protocol drafting, AI-moderated interviews, analysis, synthesis, and deliverables, all resulting in “insane” productivity gains.
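Stratified random sampling, one of the steps listed above, is easy to show concretely. The minimal Python sketch below is an illustration only, not Savina’s actual workflow. It assumes a participant list where each record carries a segment field; the `stratified_sample` helper, the `segment` field, and the pool itself are all hypothetical. The sketch groups candidates by stratum and then draws a simple random sample within each one, so small segments are not crowded out by large ones.

```python
import random
from collections import defaultdict


def stratified_sample(records, stratum_key, per_stratum, seed=None):
    """Draw a simple random sample of up to `per_stratum` records from each stratum.

    Hypothetical helper for illustration; not part of any specific research tool.
    """
    rng = random.Random(seed)  # a seeded RNG makes the draw reproducible for auditing
    strata = defaultdict(list)
    for record in records:
        strata[record[stratum_key]].append(record)
    sample = []
    for name in sorted(strata):  # iterate strata in a deterministic order
        group = strata[name]
        # Take everyone if the stratum is smaller than the requested quota.
        sample.extend(rng.sample(group, min(per_stratum, len(group))))
    return sample


# Hypothetical participant pool; "segment" is an illustrative stratum field.
pool = (
    [{"id": i, "segment": "new"} for i in range(50)]
    + [{"id": i, "segment": "power"} for i in range(50, 60)]
    + [{"id": i, "segment": "churned"} for i in range(60, 63)]
)
picked = stratified_sample(pool, "segment", per_stratum=5, seed=42)
print(sorted((p["segment"], p["id"]) for p in picked))
```

This sketch uses equal allocation, a fixed quota per stratum. Proportional allocation, which sizes each quota by the stratum’s share of the pool, is a common alternative when the goal is to mirror the population rather than to guarantee coverage of small segments.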

Designing human–agent collaboration (without sacrificing rigor)

Savina’s gains don’t come at the expense of quality. They come from intentionally learning technical foundations, like processing pipelines, systematic review, and human-in-the-loop systems, then designing the right collaboration paradigm between human and agent. She notes that much of the burden falls on prompt formulation; in the future, she anticipates that advanced workflows will be much more accessible to non-technical researchers.

“In my experience, there’s no trade-off between rigor, quality, scale and speed, which is totally crazy. But the only reason I’m able to do that is I did the hard work of learning about technical concepts that otherwise would not have been on my radar. Now it’s just a matter of design catching up, making it so you don’t have to have the technical background, because right now that technical background goes into how I formulate prompts, and the prompts then are doing the heavy lifting. As soon as the technology catches up to put less of the burden on the human, it’ll be a lot easier to use.”

Industry trends & tooling transparency

One industry pitfall Savina has observed is overconfident opinion that isn’t grounded in technical reality. A common example is evaluation protocols that ignore core parameters such as temperature, which governs how random or deterministic an LLM’s outputs are. Savina notes that without controlling for temperature, conclusions about output variability can be fundamentally misguided.

“I would say the thing that makes me cringe the most is listening to people who have very formed opinions but that are not grounded in a technical reality. Especially because a lot of them are using methodologies built to research humans and then applying it to AI. And there’s a little bit of a disconnect.”

She provided an example where a UX researcher applied a rigorous evaluation protocol to assess AI output quality but misinterpreted the results by overlooking key model mechanics—like temperature—mistaking expected model randomness for a design flaw.
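To make the temperature point concrete, the minimal Python sketch below shows how temperature is commonly applied when sampling from a language model: the raw scores (logits) are divided by T before a softmax turns them into probabilities. It illustrates the general technique only and does not describe the internals of any particular model or research tool. The `softmax_with_temperature` helper and the logit values are hypothetical.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw model scores (logits) into probabilities, scaled by temperature.

    Hypothetical helper illustrating the general technique.
    """
    if temperature <= 0:
        # As T approaches 0, sampling approaches greedy (argmax) decoding;
        # T = 0 itself would divide by zero, so it is rejected here.
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for three candidate next tokens (illustrative values only).
logits = [2.0, 1.0, 0.5]
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: " + ", ".join(f"{p:.3f}" for p in probs))
```

As T rises, probability spreads away from the top-scoring token, so repeated runs on identical input diverge more often. An evaluation that compares outputs across runs without fixing or reporting temperature can therefore mistake this expected sampling variability for a design flaw.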

On commercial tools, Savina values well-designed systems (e.g., Listen Labs for AI-moderated interviews) but warns against black-box packaging: researchers need visibility into methodology, parameterization (including temperature), prompts, pipeline order, and data handling.

“The issue I have with commercialized tools is often they’re very packaged together and they sell it to you saying that it’s always perfect. The system has no limitations. You don’t need to check the output. But there’s no peek under the hood…what’s the methodology? What is the temperature setting? What are the different prompts in the system? What order are they run against the data? What does the data pipeline look like? UX researchers maybe don’t have the vocabulary to ask these questions, and they don’t know they need to ask them. It’s a tough space because it really puts the burden on the individual to educate themselves.”

Looking ahead: Disruption and reframing the researcher’s role

Savina predicts job displacement and disruption as organizations gain concrete ways to measure AI-driven productivity. Decision-makers will soon have the numbers to rationalize headcount reductions—raising particular risk for UX research if the discipline doesn’t radically adapt.

“Today, companies don’t have real ways to quantify productivity gains from AI. As we become better at explaining and measuring and articulating productivity gains from AI, and as the models get better, the productivity gains are just going to exponentially increase and also businesses will suddenly have the data that they need to make headcount reduction based off of concrete data as opposed to speculations about what the potential impact of AI is, which is where we’re at today.”

Yet she also sees an alternative future, where scaled research drives product, design, marketing, and go-to-market by confidently filling information gaps. In this reframing, AI becomes the individual contributor (IC), and the human researcher orchestrates multiple AI contributors toward a holistic vision. This shift redefines the role both emotionally and practically.

“One change that I think about, especially with the new Claude Opus 4.5 model that just came out, AI is so good. It is the researcher, it is the IC, and I’m managing multiple IC researchers to execute a holistic research vision. And that is very much not how we think about what a human researcher is today. Re-framing what your job is versus what the AI’s job is, it’s the next big step that needs to happen emotionally for the field, and hopefully it happens sooner rather than later, so we don’t end up seeing our role re-bundled into other things.”

Recommendations & resources

For researchers struggling to grasp the technical concepts behind AI tooling, there’s good news ahead. Savina is currently writing a book, Reimaging Research, which aims to translate technical AI concepts for non-technical researchers by curating exactly what you need (and what you can ignore) to operate effectively today. She’s also helping curate the Learners Conference, which showcases practitioners at the bleeding edge with detailed accounts of their workflows and outcomes. Together, these resources build background knowledge and demonstrate practical use cases.

“I’m really excited for both! The book is a way to go through the background information and get up to speed on the technical stuff. And then the Learners Conference is a way to see how other people are innovating their practices.”

Final thoughts

If you think Claude Code is “for engineers only,” Savina argues otherwise. Through a simple terminal interface, it offers the best version of vibe coding, letting you work with CSV files and data in ways that “surprise and amaze” once you get past the initial technical hump. Her closing note: try it, and discover how accessible powerful workflows can be.

“Although it’s not optimized for non-engineer personas today, it is so much easier to use than you would think…if you can get yourself over that [technical] hump and actually try it, it will surprise and amaze you. It’s just great.”

And with the introduction of Anthropic’s Cowork solution, we may continue to see these capabilities expand to non-technical users—providing access to the same agentic architecture without the command-line interface.

As the boundaries between human ingenuity and AI capability continue to blur, Savina’s journey reminds us that the future of research belongs to those willing to reimagine their craft. By embracing technical fluency, demanding transparency from our tools, and reframing our roles as orchestrators of both human and agentic insight, we unlock new dimensions of speed, scale, and rigor.

Next week, Chelsey Fleming shares how she’s blending generative AI, creativity, and a strong human touch to reimagine research for the Gen Media team at Google Labs—don’t miss her unique perspective in the next installment of our series.